Synthetic media is the artificial production, manipulation, or modification of images, video, and audio using artificial intelligence. When used to mislead or deceive, they’re often described as “deepfakes” – the word “deep” stemming from deep learning software.

The underlying technology has many positive applications ranging from adding effects to Hollywood movies, creating lifelike human avatars for customer services, generating fun social media clips, and more. However, their use to create political misinformation, fake celebrity and revenge porn, and even to commit fraud has captured news headlines and raised concerns.

Not all fakes are necessarily deepfakes, but the combination of new AI-based manipulation tools with traditional CGI and special effects can produce very convincing results. Indeed, telling fake from reality can be harder than you think; try MIT’s online research to see how well you can do!

Today, synthetic media technology stands on the brink of not only altering and manipulating existing visual and audio records in a convincing way but also creating entirely synthetic worlds, populated by believable and engaging characters. In this article, we’ll take a closer look at this technology, including its history, the key players pushing it forward, as well as an overview of how deepfakes are made.

A Brief History

The technologies behind synthetic media have been in development since the late 1990s. In 1997, Video Rewrite synthesized new lip movements from a separate audio track to make actors appear to mouth words they didn’t actually say. It was the first significant use of artificial intelligence (AI) to manipulate video in a convincing way.

During the following two decades, huge advances were made in facial recognition and simulation, voice processing, combining CGI with real video, and a host of related technologies. These didn’t necessarily use AI, but they did lay the foundations of techniques that machine learning would then optimize and improve.

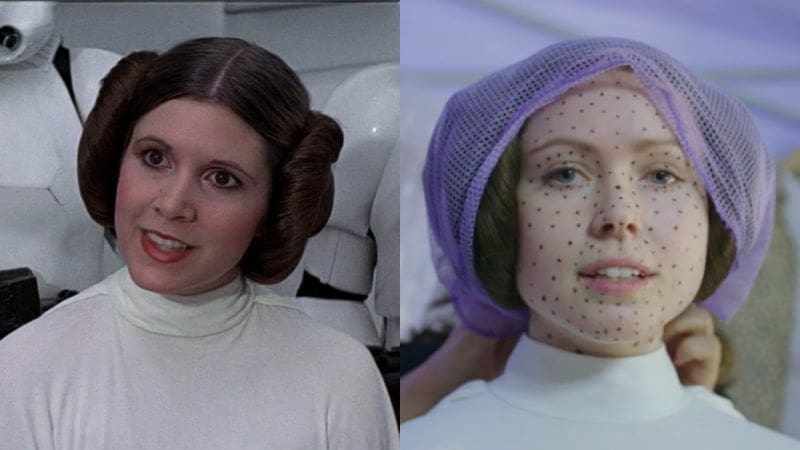

In 2009, Avatar shook the world of filmmaking with its groundbreaking mapping of actors’ faces onto computer-generated graphics. In 2014, a young Audrey Hepburn starred in a revolutionary chocolate commercial. Her face was mapped onto body-double actors using advanced software. In 2019, The Irishman used the most extensive de-aging process seen in a feature-length movie.

These novel instances of synthetic media were impressive, but they relied on relatively slow, complex software and manual intervention. Transformations were usually hardcoded into the computer programs using maker points (often painted onto actors’ faces) to anchor the underlying algorithms.

The Emergence of Deepfakes

Deepfake technology simplifies the transformation process by using neural networks to automatically “learn” the characteristics of anyone’s face (or voice) from hundreds or even thousands of examples. Once the characteristics are learned, the AI applies the transformations automatically and with convincing results.

The technology takes its name from an incident that occurred in 2017. A Reddit user gained notoriety by posting fake celebrity porn that was created by this newer, faster AI-based approach. That user’s name was “Deepfake”, and the rest is history.

In the relatively short intervening period, there has been an explosion of software companies offering solutions to synthetically generate video and audio – not just of people but of entire scenes. YouTube channels dedicated to Deepfakes, such as Shamrock and Ctrl Shift Face, have emerged with large followings. Easy-to-use deepfake apps (see below) are commonplace, and we’ve even seen the huge popularity of entirely synthetic Instagram celebrities like Lil Miquela.

Increasingly realistic deepfakes, with their accompanying controversies and growing numbers of creative practical uses, are set to become a more common feature of life in the 21st century. But who is driving this, where is it used, how does it work, and what can we expect in the future?

Today's Players

The majority of today’s biggest tech players and entertainment companies are researching the field of synthetic media. Amazon is seeking to make Alexa’s voice more lifelike, Disney’s exploring how to use face-swapping tech in films, and hardware players such as Nvidia are pushing the bounds of synthetic avatars as well as services for film and television production.

While some organizations seek to improve the quality of entertainment and commercial applications, others such as Microsoft and DARPA are working hard to develop solutions in a tech arms race to help tell the fake from the real!

Much of the underlying synthetic media and deepfake software is open source (e.g. StyleGAN), and it has been picked up and built upon by start-up companies exploring specific niches in the wide synthetic media marketplace. Examples include the following:

- Wombo is a popular app that lip-syncs audio to turn still selfies into personalized music videos

- Avatarify does much the same to create “magical singing portraits with your friends”!

- FaceApp uses AI to transform selfies, including tools to make faces look younger or older.

- Reface, with over 100 million downloads and voted app of the year on Google Play, is one of the best-known video face-swapping tools. Many examples of what it can do are found on YouTube, TikTok, and Instagram face swaps video.

- MyHeritage makes historic photos “come to life”, animating them to talk and interact.

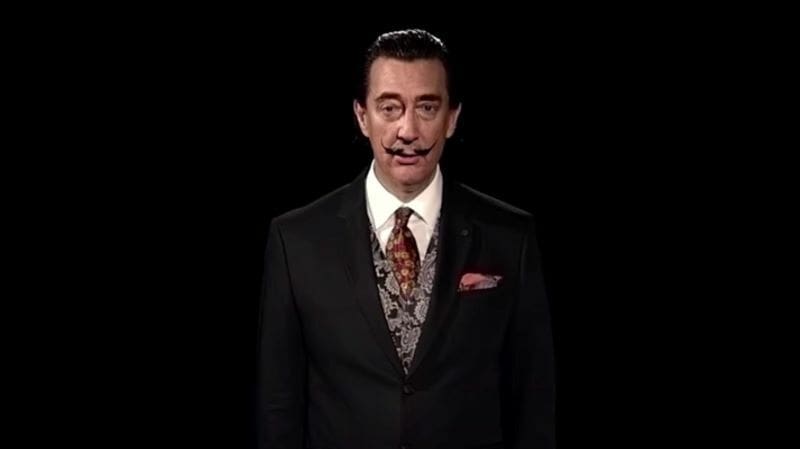

Desktop and cloud-based solutions offer more sophisticated solutions. Synthesia is a leading provider of synthetic avatars used for training, customer services, video production, and more. Users can choose from a ready-made avatar (as in the example above) or, after a 40-minute-long recording session, have a lifelike equivalent made of themselves. EY, the accounting giant formerly known as Ernst & Young, has been using these for sales pitches. Others have been using them to create video training in multiple languages, with voices generated automatically from typed text.

In many cases, synthesizing voice alone is the main goal. Increasingly, realistic voice generation has applications in everything from customer services, automatic translation, and more. Example players include Respeecher and Resemble AI. The former can change a user’s voice to that of another, manipulate its age, or translate to another language, while the latter allows users to create their own synthetic voices, including lifelike renditions of their own voice automatically reading any text that’s presented to it.

From these examples and more, we can see the breadth of potential applications. The exact execution may vary, but the underlying techniques share the same foundations.

How Deepfakes Are Made

Classic computer manipulation of images uses complex algorithms programmed with traditional software. These algorithms are enormously complex, and it’s both difficult and time-consuming to characterize all of the subtle variables that make a face, voice, or scene in order to make them look realistic. As such, modern computer science techniques take a different approach.

Training Data

Deep learning software, a form of AI implemented using neural networks, gets around heavy algorithm complexity by itself “learning” the nuances. In effect, it’s exposed to vast volumes of training data (i.e. images, sounds, and other source data) from which it identifies key features using processes not dissimilar to those used by our own brains.

To make a convincing fake video, large volumes of video, still images, voice recordings, and sometimes even body scans are required as training inputs. Synthesia’s clients, for example, are filmed for roughly 40 minutes as they read from a prepared script to provide the data required by their neural networks.

The large volume of existing content available for politicians and celebrities short-circuits this approach, meaning the “cloning” process can be done without their knowledge or consent!

Latent Spaces

The features collected from training data are “encoded” mathematically in latent spaces, where all the information that needs to be known about the training data is compressed and organized so that it can be reused or combined with other data. Typical systems will combine multiple latent spaces.

For example, a latent space that has learned the characteristics of aging can be combined with another that was trained with a specific individual’s face in order to generate images of that individual appearing older or younger. Check out this example of a future, elderly David Beckham appearing in a video.

Armed with enough learned data, deepfake technology can create remarkably convincing synthetic alternatives. A side-by-side comparison of the original de-aging used in The Irishman with a more modern and much easier-to-implement deepfake version shows just how effective this can be.

Limitations

Despite impressive results, deepfake AI isn’t smart. It has no awareness that a face is a face or that a tree is a tree. A multitude of elements can betray the fake from the real. These include subtleties of lighting and shadows, eyes that blink in an unrealistic way, or subtle nuances of facial expression or intonation of voice. In the quest for ever more accurate realism, all of these need to be learned and cleverly combined to make a compelling deepfake.

Apps implemented in phones get around this issue by lowering the quality, limiting what is simulated, and making other assumptions about the output. But even these use multiple networks that work in different ways and serve different purposes.

Below, we’ll take a closer look at what’s involved in the synthesis of images and video (including face swapping and manipulation) as well as audio and speech (including entirely artificial voices).

Image & Video

As we’ve seen, machine learning algorithms work by being repeatedly exposed to vast sets of training data. There are many types of neural networks that can do this, but two in particular stand out.

The first is the impressively named variational autoencoder (VAE). Presented with multiple images, it learns how to distinguish key features in a probabilistic way (as opposed to less complex deterministic approaches). This is important because it allows the network to generalize what it has learned and makes it easier to, for example, mix the features of one face with those learned from another. VAEs formed the core of most early face-swapping software. Their outputs, however, could sometimes be too general and their “fakeness” easy to spot.

To combat this, generative adversarial networks (GANs) have become popular since their conception in 2017. In essence, two neural networks are trained. One, the “discriminator”, is used to determine how realistic an image created by the other (“generator”) network is. Any parts that don’t seem right are highlighted, then the generating neural network adjusts itself and repeatedly tries again until an optimal version is achieved. In this way, GANs significantly increase the level of realism.

In practice, the process is much more involved, with several other types of neural networks typically involved to classify different image features (such as age, sex, or facial expression), handle frame transitions in video, and streamline what is otherwise a mathematically slow process.

To see what GANs are capable of, check out the amazing examples on This Person Does Not Exist, which hosts a gallery of remarkably realistic synthetically generated faces. The technology has also been used to bring Salvador Dali back to virtual life and introduce exhibits in the museum that highlights his works and life.

It’s also worth noting that the same technology can also be used for recreating notable genres of portrait painting, recognizing and changing scenes such as landscapes, and even applying transformations to animal faces and bodies, as demonstrated by Nvidia’s StyleGAN2 software.

Audio & Speech

Speech synthesis is an exceptionally hard challenge for computer technology, and most deepfake videos still use real human voice-overs as a result. Conventional approaches have advanced enormously since the primitive speech synthesis used by Stephen Hawking, but the emergence of deep learning is transforming what’s possible.

Traditionally, snippets of recorded voice were strung together, using complicated algorithms. Instead, deep learning figures out the tonal nuances of speech in order to reconstruct spoken words, yielding more realistic results.

The time-based nature of audio makes this a more complex challenge, calling for specially designed convolutional neural networks and other dedicated audio circuitry. These took a huge step forwards in 2016 when London-based DeepMind developed the concept of WaveNet, which is capable of directly generating realistic audio. Since then, WaveNet performance has been improved by over 1,000 times, and GAN-like techniques have been applied to refine the generated audio outputs.

Typical modern speech synthesis systems are complex – being capable of conveying emotional nuance, accents, and even the complexities of singing – and they’re fast approaching levels indistinguishable from actual speech. As with video, audio applications can either modify a recorded voice to give it the characteristics of another, as focused on by companies such as Respeecher. Alternatively, they can be used to create an entirely synthetic voice from text, such as Speechelo.

Looking to the Future

The positive applications of synthetic media are clear to see. Those who have lost the ability to speak can be given a new voice. Movies and films can be convincingly dubbed into new languages and retain the original actors’ voices. Software-based customer service agents can interact in a more engaging way. The potential applications are numerous.

On the downside, even low-quality fakes (or “cheapfakes”) have the ability to promote political misinformation or be used for fraud and other malicious uses. A fake video of the Greek Finance Minister caused great controversy in Germany in 2017, and in 2019, a fake image of an Australian soldier caused a diplomatic incident with China. Had these examples been produced with deepfake technology, they might have been more difficult to contest.

In addition to concerns about the potential to disguise fake images or recordings as real, the emergence of convincing deepfakes also has the potential to erode public confidence in genuine media. Consequently, many social media platforms have banned deepfakes and are engaging in research to quickly identify them.

Others, including IBM, have speculated on the use of blockchain technology to validate what is real. There is, however, no easy answer, and increased awareness of what is possible is probably one of the best immediate defenses.

Deepfakes also raise other interesting questions about ownership and copyright. For example, actors often earn ongoing royalties from their performances, but how does that change if entire appearances can be generated or modified synthetically without their direct involvement?

In the meantime, synthetic media and related technologies continue to improve at a rapid pace. The market may still be nascent, but it looks set to transform many areas. It will likely change much about how content is made and consumed. It will also open entirely new avenues of film- and game-making, as well as enable radically new human-computer interfaces.

In the future, seeing might not always be believing, but it will certainly be compelling!

License: The text of "Deepfake: What’s the Technology Behind It?" by All3DP is licensed under a Creative Commons Attribution 4.0 International License.