We often associate the terms microprocessor and microcontroller with one another, and sometimes even use them interchangeably. However, those who really understand these two chips know that there are some clear distinctions, in spite of some shared characteristics.

A microcontroller is like a miniaturized computer on a single chip. All the elements of a computer, such as CPU, memory, timers, and registers, are tightly integrated with each other. A microprocessor, on the other hand, is just the computing element (the CPU) in a computer. All the other hardware necessary for a device to function is attached externally. So, you could say a microcontroller is a really specced-up microprocessor, but a microprocessor can’t be called a microcontroller.

The lines between these two components do overlap sometimes, but mostly they’re used in different ways, and once you understand the features of each, you’ll never confuse them again! In this article, we’ll look at the differences between the two chips and what makes each of them unique.

A Micro History

The microprocessor and the microcontroller are among the most significant inventions of the 20th century. A lot of modern computing is concerned with improving and iterating upon the basics of both these technologies.

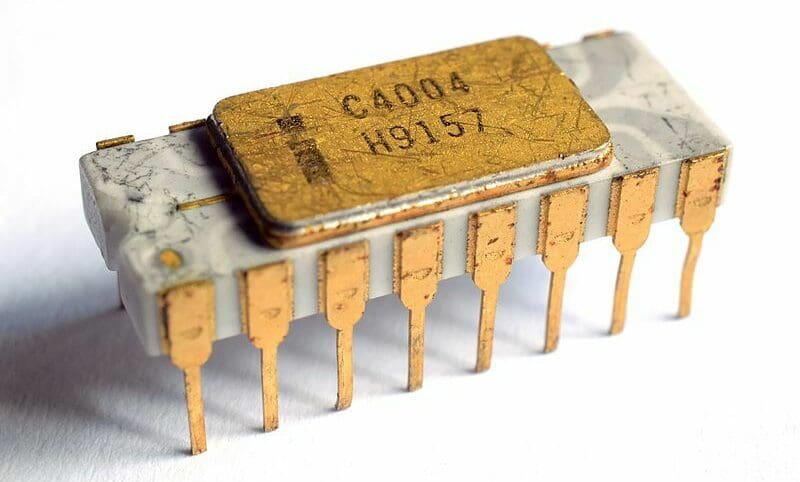

Intel released the first commercially available microprocessor, the Intel 4004, in 1971. The 4004 was just a 4-bit microprocessor, yet it laid the foundation for modern-day computers and represented a significant leap in the computer industry.

One of the first applications of these microprocessors was Busicom calculators. It’s estimated that Busicom sold around 100,000 calculators with the Intel 4004 inside them.

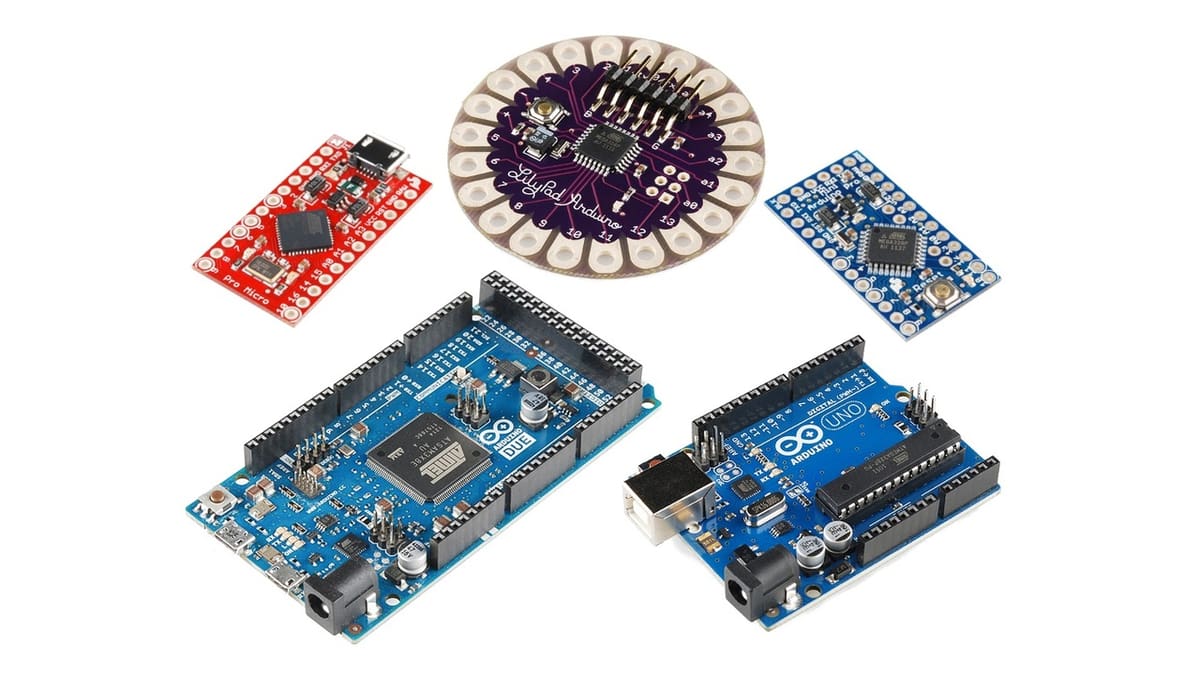

Around the same time that Intel was developing its microprocessor, Texas Instruments was working on similar technology: microcontrollers. The key difference between the two innovations was the way their components interfaced. Texas Instruments released the TMS 1000 microcontrollers in 1974, which integrated everything in a single chip. Initially, Texas Instruments built the microcontrollers for calculators, but they soon outgrew this application, and now we can find microcontrollers in almost everything electronic.

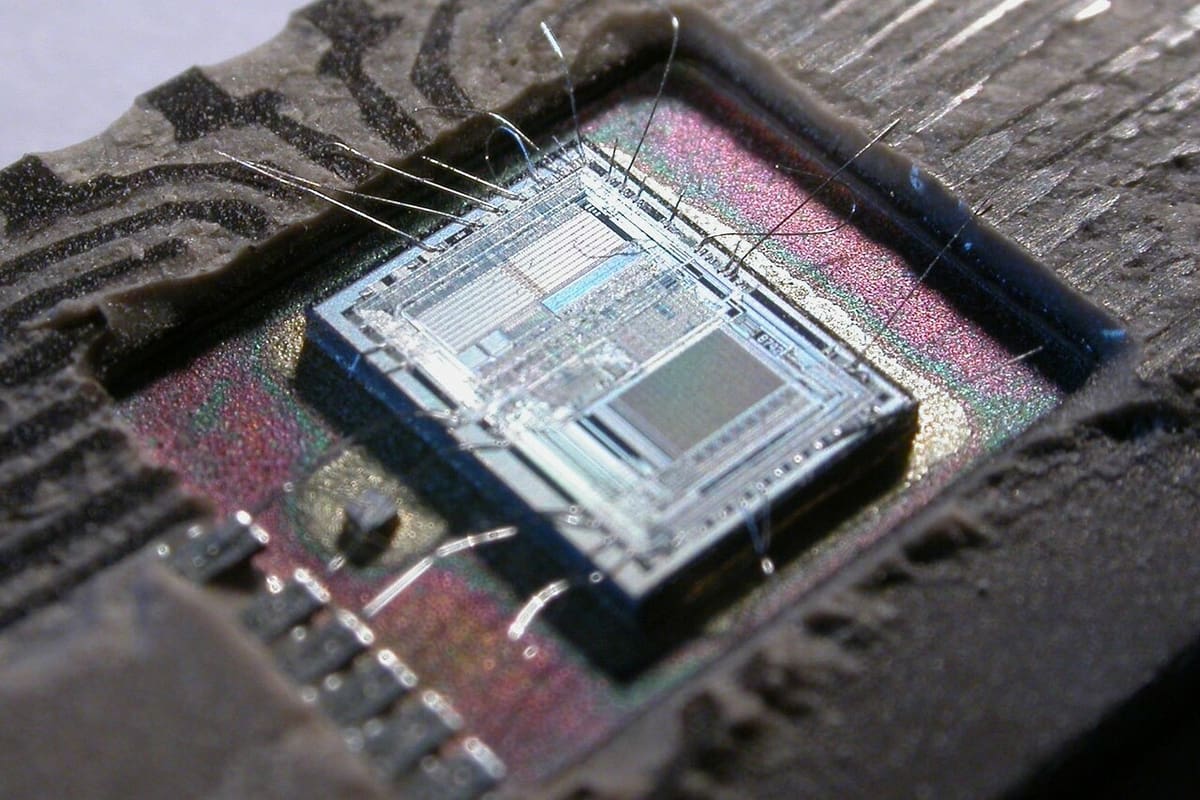

Microprocessing Elements

The microprocessor is the computing element of a system. It takes a set of instructions stored in memory, executes it, and delivers the resulting outputs to peripheral or external devices. In other words, microprocessors just do the computing; they’re not responsible for any other operations you might associate with computers, including data transfer, data storage, visual output, audio output, and so on.

For example, even just using the calculator application on your computer makes use of several devices: a keyboard, detecting button presses; a data bus, transferring the digital signals between devices; random-access memory (RAM), storing temporary application information (including intermediate values for calculations); and a graphics processor, generating the signals to be interpreted by your screen. The microprocessor, meanwhile, is only responsible for processing the information required to coordinate the operations (based on operating system and firmware code) and to perform the calculations (based on the calculator application’s code).

Microprocessors comprise three major elements:

- Arithmetic and logic unit (ALU): The ALU performs arithmetic operations like addition, subtraction, multiplication, and division. It also handles logical operations like “or”, “nor”, “and”, and so on. The ALU is where the nitty-gritty bits-and-bytes computing occurs.

- Register array: The register array is used to store any temporary data that’s required by the ALU. In a way, the register array acts as a small, very fast scratchpad memory that sits right next to the ALU.

- Control unit: Last, the control unit directs the flow of data in and out of the microprocessor and generates the timing and control signals required by the elements within it.

These elements form the core of a microprocessor. However, the microprocessor by itself is of no use. It needs to be connected with external memory and other peripherals in order to do anything.
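To make the roles of the three elements concrete, here is a minimal sketch of a toy processor in Python. The instruction names (`LOAD`, `STORE`, `ADD`, and so on), the four-register layout, and the tuple format are all invented for illustration and don’t correspond to any real chip. Note how the memory is a separate list handed to the processor, mirroring the external memory a real microprocessor depends on.

```python
# Toy model of a microprocessor's three elements.
# The instruction set and register count are assumptions made for this sketch.

def alu(op, a, b):
    """ALU: performs arithmetic and logic operations on two operands."""
    if op == "ADD":
        return a + b
    if op == "SUB":
        return a - b
    if op == "AND":
        return a & b
    if op == "OR":
        return a | b
    raise ValueError(f"unknown ALU operation: {op}")


def run(program, memory):
    """Control unit: fetches, decodes, and executes instructions until HALT."""
    registers = [0, 0, 0, 0]   # register array: small, fast temporary storage
    pc = 0                     # program counter: index of the next instruction
    while True:
        instr = program[pc]    # fetch
        pc += 1
        name = instr[0]        # decode
        if name == "HALT":
            return registers
        if name == "LOAD":     # ("LOAD", reg, addr): external memory -> register
            _, reg, addr = instr
            registers[reg] = memory[addr]
        elif name == "STORE":  # ("STORE", reg, addr): register -> external memory
            _, reg, addr = instr
            memory[addr] = registers[reg]
        else:                  # (op, dest, src_a, src_b): handled by the ALU
            _, dest, src_a, src_b = instr
            registers[dest] = alu(name, registers[src_a], registers[src_b])


# "External" memory holding two inputs and one free slot for the result.
memory = [7, 5, 0]
program = [
    ("LOAD", 0, 0),      # r0 = memory[0]
    ("LOAD", 1, 1),      # r1 = memory[1]
    ("ADD", 2, 0, 1),    # r2 = r0 + r1
    ("STORE", 2, 2),     # memory[2] = r2
    ("HALT",),
]
registers = run(program, memory)
print(memory[2])  # 12
```

Without the `memory` list, the processor has nothing to load from or store to, which is the point made above: the computing element alone can’t do anything useful.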

Microcontrolling Elements

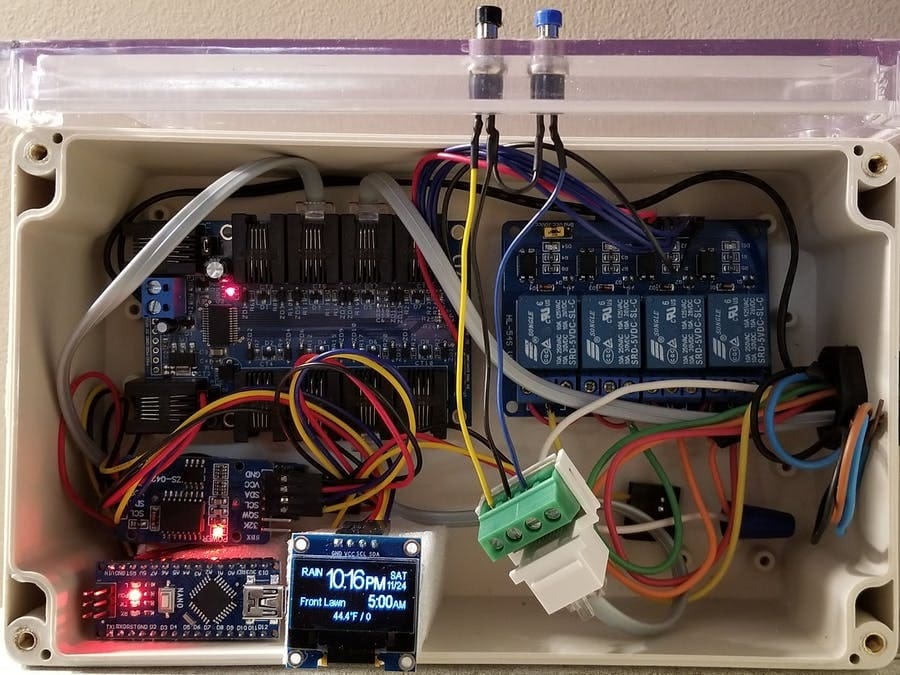

The core of how a microcontroller works is similar to a microprocessor. After all, a microcontroller contains a microprocessor at its heart. The major difference lies in the architecture and the additional components that are present.

Microcontrollers contain elements that interface with each other for the proper functioning of a device:

- Microprocessor: We refer to the microprocessor that forms part of the microcontroller as the CPU of the system. This is where the computing takes place. However, even though it works in the same way, this CPU is generally far less powerful than the standalone microprocessors we’ve referred to so far in this article.

- Memory: A microcontroller’s memory comprises program memory (ROM) and data memory (RAM). The ROM is where the program is stored as a sequence of instructions. RAM is used for storing variables while the CPU is running.

- Timers & counters: Timers and counters are used for all the clocking operations in the microcontroller. This includes functions such as pulse-width modulation, clock control, and frequency measurements.

- Converters: Analog-to-digital converters (ADC) and digital-to-analog converters (DAC) are used for converting the input and output signals in the microcontroller, respectively.
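As a rough illustration of what the timer and converter peripherals do, the sketch below models a timer-driven PWM output and an idealized ADC/DAC pair in Python. The function names, the 3.3 V reference, and the 10-bit resolution are assumptions chosen for the example; real parts differ in resolution, reference voltage, and conversion behavior.

```python
# Idealized models of two microcontroller peripherals.
# All names and default values here are illustrative assumptions.

def pwm_levels(period, compare):
    """Timer-based PWM: the output is high while the counter is below `compare`."""
    return [1 if count < compare else 0 for count in range(period)]


def adc_read(voltage, v_ref=3.3, bits=10):
    """Ideal ADC: map an input of 0..v_ref volts onto codes 0..2**bits - 1."""
    max_code = (1 << bits) - 1
    clamped = min(max(voltage, 0.0), v_ref)   # inputs outside the range saturate
    return round(clamped / v_ref * max_code)


def dac_write(code, v_ref=3.3, bits=10):
    """Ideal DAC: map an integer code back to an output voltage."""
    max_code = (1 << bits) - 1
    return code / max_code * v_ref


# One PWM period of 8 timer ticks with the compare value set to 2.
wave = pwm_levels(period=8, compare=2)
duty_cycle = sum(wave) / len(wave)
print(wave)        # [1, 1, 0, 0, 0, 0, 0, 0]
print(duty_cycle)  # 0.25
```

Changing `compare` changes the fraction of each period the output stays high, which is how a timer can dim an LED or set a motor speed without any analog hardware.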

Now you have a clear idea of how these chips differ in construction. The microcontroller is a complete, functional unit designed with a specific task in mind, while the microprocessor is a standalone part intended to be combined with other elements (for example, in a microcontroller or a PC).

Notable Differences

Today’s microprocessors and microcontrollers share the same DNA – that is, they’re composed of similar elements. Yet, the way each of them functions and is applied makes all the difference. Let’s look at how they really differ in terms of practical usage.

Computing Power

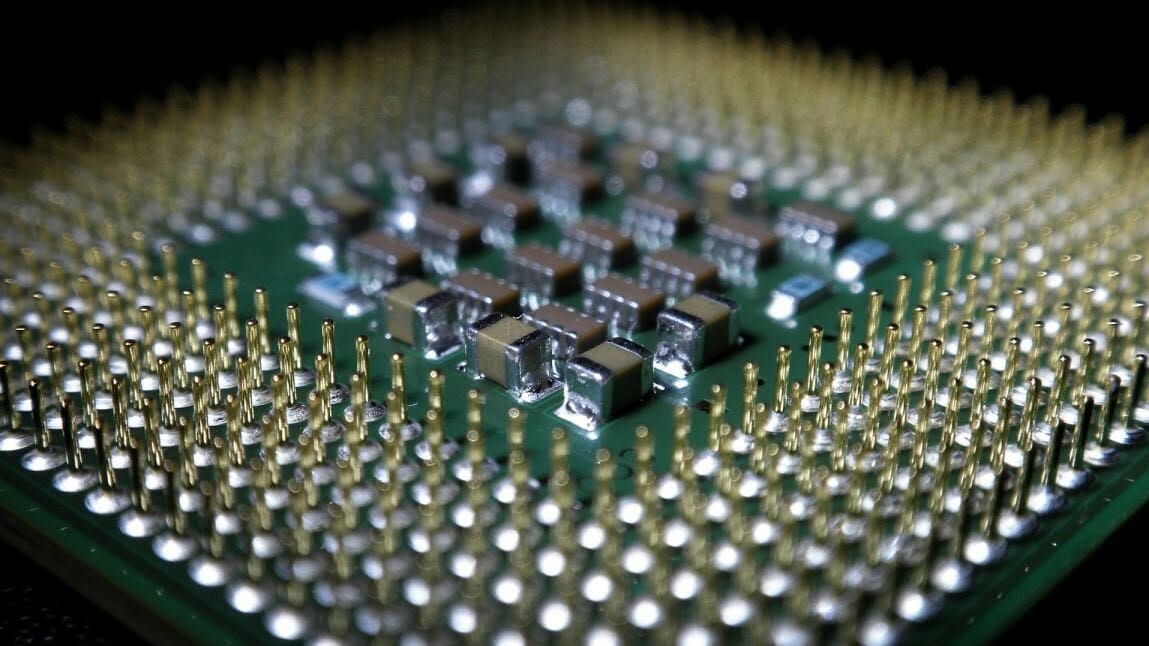

Microprocessors are the clear winners in raw computing power. These days, clock speeds typically range from 1 GHz to 4 GHz and beyond. This enables microprocessors to execute large numbers of operations very quickly. Paired with the right peripheral and external devices, a microprocessor can be adapted to almost any application; nothing is pre-defined.

Microcontrollers, on the other hand, are limited in performance and speed. This is mostly by design: they’re meant to perform the same basic tasks over and over, so they’re built to be small and cost-effective for that application and are generally clocked at much lower speeds than microprocessors.
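To get a feel for what the clock-speed gap means, here is a back-of-the-envelope sketch in Python. It assumes, very simplistically, that both chips complete one unit of work per clock cycle, which real hardware doesn’t guarantee. The 16 MHz microcontroller clock and the workload size are hypothetical figures picked for the example; the 3 GHz figure sits within the range mentioned above.

```python
# Rough comparison of run time at two clock speeds.
# Assumes one unit of work per clock cycle, a deliberate simplification.

def run_time_seconds(cycles, clock_hz):
    """Time needed to execute `cycles` clock cycles at `clock_hz` hertz."""
    return cycles / clock_hz


WORKLOAD_CYCLES = 48_000_000           # hypothetical workload
MCU_CLOCK_HZ = 16_000_000              # assumed microcontroller clock (16 MHz)
MPU_CLOCK_HZ = 3_000_000_000           # assumed microprocessor clock (3 GHz)

mcu_time = run_time_seconds(WORKLOAD_CYCLES, MCU_CLOCK_HZ)
mpu_time = run_time_seconds(WORKLOAD_CYCLES, MPU_CLOCK_HZ)

print(mcu_time)  # 3.0
print(mpu_time)  # 0.016
```

In practice the gap is usually wider than clock speed alone suggests, since microprocessors also tend to have multiple cores, caches, and wider data paths.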

Memory

A microprocessor’s memory is externally connected. This allows for custom system design and easy upgrades. As the memory is external, it’s up to the user to choose the size and speed of the RAM and ROM for the system.

This differs from microcontrollers, where the memory is integrated along with the CPU in the chip itself. This limits the size of the memory; the flash memory of a microcontroller is often limited to just 2 MB. However, as the memory and the CPU are closely integrated, the memory operation speeds might be slightly faster in a microcontroller.

Operating Systems

As they’re not intended for any specific task, microprocessors are often paired with complex operating systems for generic functionality. OSs like Windows, Linux, macOS, and Android are comparatively resource-intensive and hence generally need a microprocessor to run. While this means that using a microprocessor lets you achieve various tasks, it also means that you need a complex piece of software for your operations.

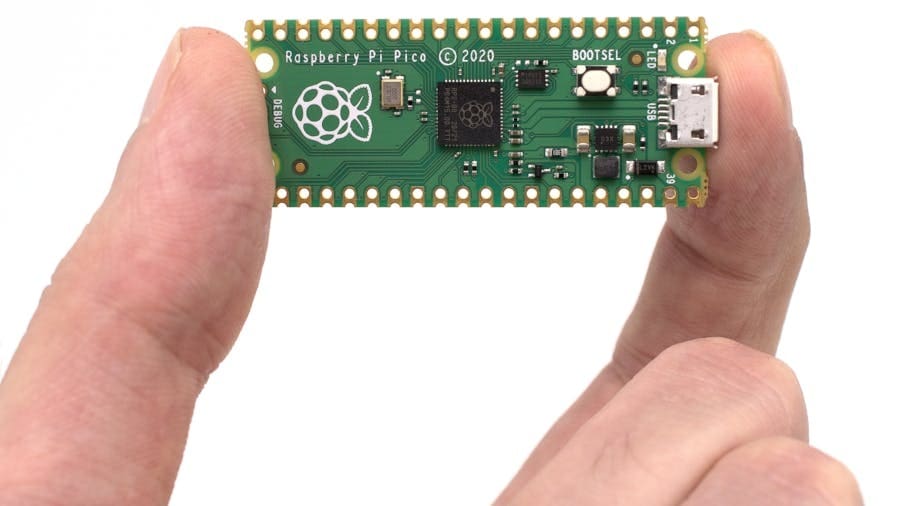

Microcontrollers, in comparison, don’t typically need a full-scale OS to function, with their applications running directly on firmware. This usually means that a microcontroller is easy to program, especially for small, repetitive applications. There’s also the option to use real-time operating systems (RTOS) with some higher-level microcontrollers for applications that require a more complex control system.
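Firmware that runs without an OS is commonly structured as a single loop that repeats forever, polling inputs and updating outputs. The sketch below imitates that pattern in Python with simulated hardware. The `read_button` and `set_led` callbacks are hypothetical stand-ins for real pin reads and writes, and the button-toggles-LED behavior is just an example task.

```python
# Simulation of a bare-metal "super loop" with no operating system.
# read_button and set_led are hypothetical stand-ins for hardware access.

def make_firmware(read_button, set_led):
    """Return a `loop` function that real firmware would call forever."""
    state = {"led_on": False, "last_button": False}

    def loop():
        pressed = read_button()
        # Toggle the LED on each new press (rising edge), not while held down.
        if pressed and not state["last_button"]:
            state["led_on"] = not state["led_on"]
            set_led(state["led_on"])
        state["last_button"] = pressed

    return loop


# Simulated hardware: a scripted series of button readings and an LED log.
button_samples = iter([False, True, True, False, True])
led_history = []

loop = make_firmware(lambda: next(button_samples), led_history.append)
for _ in range(5):    # on a real microcontroller this would be `while True:`
    loop()

print(led_history)  # [True, False]
```

The whole application is one small function with a few bytes of state, which is why small, repetitive tasks are easy to program this way. Once several such tasks have to share the chip with strict timing, an RTOS starts to make sense.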

Power

Microprocessors consume more energy than microcontrollers. This shouldn’t come as a surprise, as microprocessors operate at much higher speeds and are interfaced with external components that draw power of their own. You’ll rarely find microprocessors in applications where low energy use is a requirement.

Microcontrollers consume very little power because they’re designed with a specific task in mind and hence can be configured with only the necessary components. This saves on a lot of power, so you’ll often find microcontrollers at the heart of battery-powered devices.
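A quick estimate shows why this matters for battery-powered devices. The Python sketch below divides battery capacity by average current draw to approximate run time. The 2,000 mAh battery and both current figures are hypothetical round numbers, not measurements of any particular chip, and the formula ignores real-world effects such as battery self-discharge and voltage-regulator losses.

```python
# Idealized battery-life estimate: capacity divided by average current.
# All figures below are hypothetical round numbers for illustration.

def battery_life_hours(capacity_mah, current_ma):
    """Approximate run time in hours for a given average current draw."""
    if current_ma <= 0:
        raise ValueError("current draw must be positive")
    return capacity_mah / current_ma


BATTERY_MAH = 2000          # assumed battery capacity
MCU_SYSTEM_MA = 5           # assumed draw of a microcontroller-based device
MPU_SYSTEM_MA = 500         # assumed draw of a microprocessor-based system

mcu_hours = battery_life_hours(BATTERY_MAH, MCU_SYSTEM_MA)
mpu_hours = battery_life_hours(BATTERY_MAH, MPU_SYSTEM_MA)

print(mcu_hours)  # 400.0
print(mpu_hours)  # 4.0
```

Under these assumptions, the same battery lasts a hundred times longer in the microcontroller-based device.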

Connectivity

Nowadays, you don’t need to worry about interfacing with either of these devices as they both have a range of connectivity options. However, the difference lies in the connectivity speeds.

Microprocessors can handle high-speed incoming data with ease, thus allowing them to be used with faster interfacing protocols such as Gigabit Ethernet and USB 3.0.

Microcontrollers are more limited in computing power and hence they process data at slower speeds. This also limits their connectivity speeds, so you’ll seldom find a microcontroller that’s connected with high-speed data transfer ports.

Size

This can be a controversial difference, but it’s still noteworthy. A microprocessor-based system is large when compared to a microcontroller. A modern processor can comprise billions of transistors in its CPU. It also needs to be interfaced externally with other components such as memory, ports, timers, and converters. Thus, the overall size of a PCB housing a microprocessor is comparatively large.

As a microcontroller houses everything within itself, on a single chip, the overall footprint is tiny. This makes it more versatile and is why microcontrollers have so many applications.

Cost

Microprocessors are the pinnacle of processing power, and are accordingly expensive when compared to microcontrollers. Furthermore, microprocessors can’t operate by themselves. They need external devices to work with them, thus adding more cost.

As microcontrollers generally include lower-speed hardware and limited (though targeted) functionality, they cost very little. All the elements that are required to execute a task are built right into a microcontroller. A key reason why microcontrollers became so popular is the amazing computing power they provide at a fraction of the cost. This makes them great for educational purposes as well as personal, DIY projects.

Final Thoughts

To give a final, basic analogy for the differences between a microprocessor and a microcontroller, we can simply look at how your computer and your washing machine work.

A computer’s tasks are varied, and the machine requires a lot of processing power to execute a wide variety of applications. A microprocessor is therefore used to perform many types of tasks efficiently.

With a washing machine, the task is fixed and the performance requirements are minimal. There’s no need for billions of transistors for raw processing power; a simple microcontroller has enough computing power to run a wash cycle. Thus, a microcontroller performs a single task reliably and efficiently.

With this in mind, the next time you’re building a simple project such as an IoT device, you’ll know why you’re using a microcontroller and not the latest microprocessor.

License: The text of "Microprocessor vs Microcontroller: The Differences" by All3DP is licensed under a Creative Commons Attribution 4.0 International License.