To render something, by definition, is to bring it to life. Just as coloring a painting gives it its final look, in computer graphics, “rendering” denotes the process that generates the final image, with all the textures and lighting, from a 2D or 3D model. There are different ways in which rendering can be carried out, one of which is using graphics processing units (GPUs).

GPUs have thousands of small, low-powered cores that process data in parallel, which benefits the rendering process. This parallelism lets a GPU work through huge amounts of data quickly. However, speed and parallel processing are just the tip of the iceberg of GPU rendering.

In this article, we’ll go over everything that applies to GPU rendering: features, hardware, software, and everything in between. So, let’s get into GPU rendering.

GPU: An Introduction

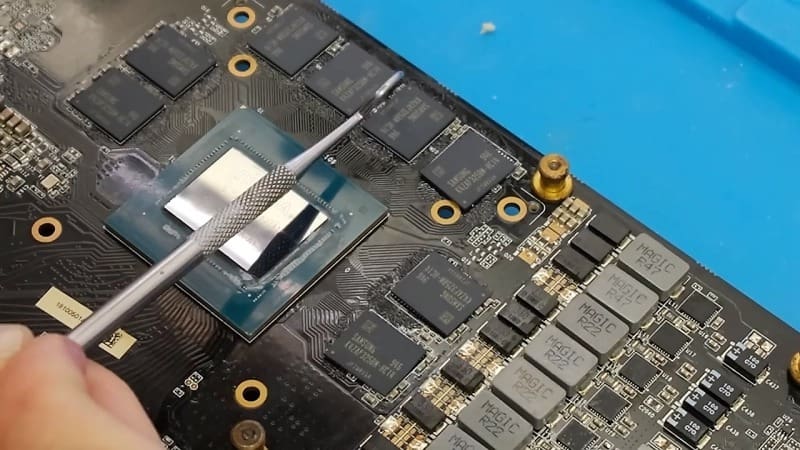

GPUs are amazing pieces of technology. In modern systems, we use GPUs for gaming, data mining, big data applications, AI, machine learning, and, obviously, rendering. Dedicated GPUs are separate units that plug into a computer’s motherboard.

A single GPU can contain well over 10,000 small computing cores, which, as mentioned, are designed to run tasks in parallel. This ability enables GPUs to deliver results that are visible in real time. They even have their own random-access memory (RAM), known as VRAM (video RAM), that’s used in GPU rendering.
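To make the idea of parallel work more concrete, here is a minimal sketch in Python. It is not GPU code: the `shade` function, the image size, and the thread pool are all hypothetical stand-ins, chosen only to show that each pixel (or row) can be computed without knowing anything about its neighbors. That independence is what lets thousands of cores work at once.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 8, 4  # tiny hypothetical image, just for illustration


def shade(x, y):
    """Toy per-pixel function: a simple color gradient with values in 0..255."""
    r = 255 * x // (WIDTH - 1)
    g = 255 * y // (HEIGHT - 1)
    return (r, g, 128)


def render_row(y):
    # A row depends only on its own coordinates, never on other rows.
    return [shade(x, y) for x in range(WIDTH)]


def render_serial():
    # One worker computes every row, one after another.
    return [render_row(y) for y in range(HEIGHT)]


def render_parallel(workers=4):
    # Rows are handed out to a pool of workers; map() returns them in order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_row, range(HEIGHT)))


image = render_parallel()
```

Both functions produce the same image. Python threads won’t actually make this toy faster; the point is only that the work splits cleanly, which is the property a GPU exploits across its many cores.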

GPUs are also purpose-built chips. This means that some GPUs are specifically designed to run games and others to render. Thus, a given GPU might perform well at rendering but less well at gaming or other tasks.

Now that you’ve got a basic gist of what a GPU is, let’s begin with some of its features that are worth mentioning when it comes to rendering.

Rendering Features & Aspects

Over time, GPUs have grown from being just graphics accelerators to entire processing units. This evolution has come gradually, and with it have come some notable features and aspects of GPU-based rendering systems.

Speed

GPUs are designed for parallel processing, which takes advantage of multi-core performance. When you’re dealing with thousands of small cores, you get to experience a tremendous amount of speed. GPUs can render models much quicker than their CPU counterparts. This speed helps with real-time visualization of renders and a faster iteration process.

Optimization

Manufacturers build their GPUs with a certain task in mind. Some GPUs are optimized for gaming, some for rendering, some for designing, and so on. GPUs that are optimized for rendering can deliver consistent results faster, making them time-efficient, and also do a good job with rendering scenes.

Rising Tech

GPUs have come a long way since their inception. Every new generation of GPUs is a substantial upgrade over the previous one. GPUs are updated more frequently than CPUs, which results in increased rendering performance each year.

Comparatively Low Costs

A high-end GPU such as the RTX 3090 still costs less than a high-end CPU like the Threadripper 3990X. GPUs are also easy to scale up, so you can combine two or more GPUs in a single system, with each dedicated to specific tasks. The lower entry price of a GPU also means that more people can get their hands on one and start rendering within a budget.
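As a rough illustration of dedicating each GPU to its own tasks, the sketch below splits the frames of an animation across several GPUs in round-robin fashion. The function name `assign_frames` and the GPU labels are hypothetical; real render engines have their own ways of distributing work, so treat this as one possible scheme rather than how any particular engine does it.

```python
def assign_frames(frame_count, gpu_names):
    """Round-robin split: frame i goes to GPU number i % len(gpu_names)."""
    if not gpu_names:
        raise ValueError("at least one GPU is required")
    plan = {name: [] for name in gpu_names}
    for frame in range(frame_count):
        target = gpu_names[frame % len(gpu_names)]
        plan[target].append(frame)
    return plan


# Hypothetical setup: a 10-frame animation on a system with three GPUs.
plan = assign_frames(10, ["gpu0", "gpu1", "gpu2"])
for gpu, frames in plan.items():
    print(gpu, frames)
```

Adding a fourth label to the list spreads the same frames over four devices, which is the kind of scaling described above.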

Viewport Performance

Viewports are windows in which you can visualize the changes that take place based on your input parameters. A quicker viewport visualization translates into a speedy workflow. Because GPUs are great at running tasks simultaneously, they execute inputs quickly, which results in faster viewport rendering. You can practically view the changes in real time.

Rendering Limitations

We’ve looked at some of the prominent benefits that GPU rendering provides. However, it’s not all smooth sailing for GPUs. There are also some caveats to GPU rendering, which has limited capabilities in certain scenarios.

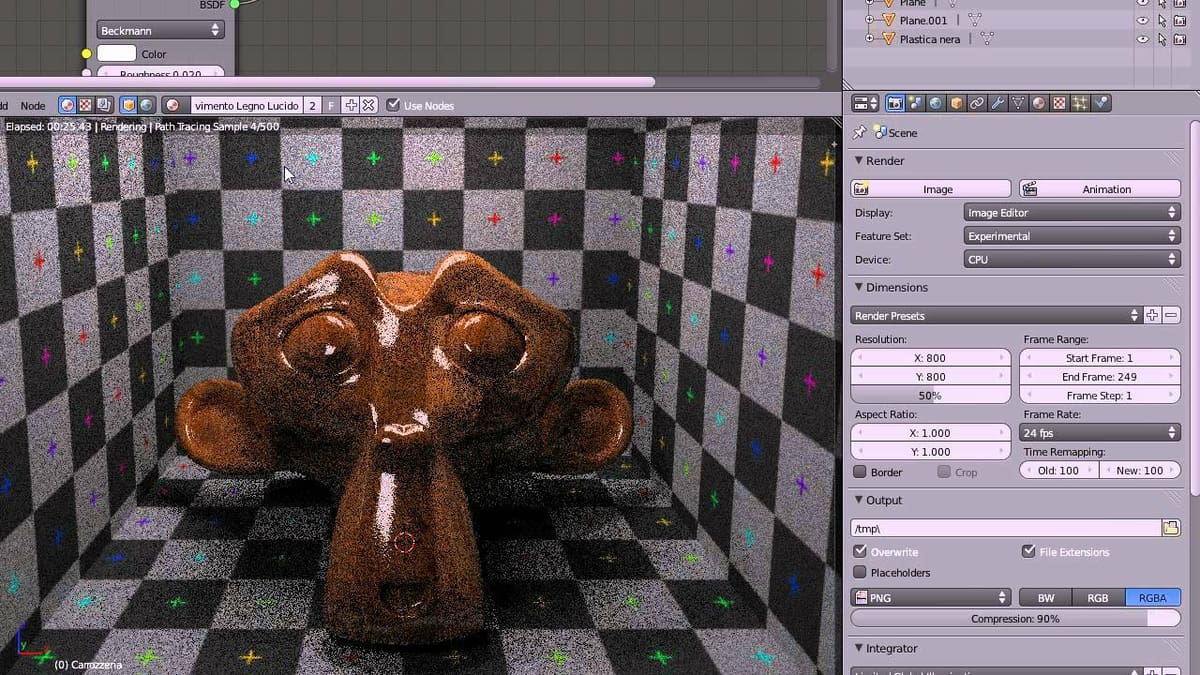

Quality

GPUs are known for their speed, but the same can’t be said for the quality of their renders. GPUs can’t run as wide a variety of instructions as CPUs can. This limits the kinds of algorithms that they can execute and hence affects the quality of the final output. GPU renders can have more noise (meaning they look grainier) compared to CPU renders.

Complexity

GPUs are purpose-built chips, which is both a boon and a bane. Optimized for a certain set of tasks, GPUs falter when they’re presented with more complex and varied tasks. This limits the variety of scenes that a GPU can process, as it can struggle to render more complex, detailed scenes with many objects.

RAM Capabilities

GPUs are limited in terms of their RAM capacity. The RTX 3090 has 24 GB of VRAM, while the Quadro RTX 8000 tops the range with 48 GB. This still pales in comparison to CPU RAM capacities, where a single CPU can have access to 128 GB of RAM or even more. The limited RAM capacity of a GPU constricts its ability to process complex environments with many elements in them. The GPU simply runs out of memory to store all the data and then has to rely on the CPU’s RAM, which results in poorer GPU rendering performance. So, because of RAM capabilities, you’re limited in the number of elements in the scenes that you can process.
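A back-of-the-envelope check shows how quickly a scene can outgrow VRAM. The sketch below is only an estimate under stated assumptions: textures are counted uncompressed (width × height × channels × bytes per channel), the geometry size is a made-up figure, a hypothetical 10% of VRAM is held back for the display and the render engine itself, and a gigabyte is treated as 1024³ bytes. Real engines compress and stream data, so actual numbers will differ.

```python
GIB = 1024 ** 3  # bytes per gigabyte, as assumed in this sketch


def texture_bytes(width, height, channels=4, bytes_per_channel=1):
    """Size of one uncompressed texture in bytes."""
    return width * height * channels * bytes_per_channel


def fits_in_vram(scene_bytes, vram_gb, reserve_fraction=0.1):
    """True if the scene fits after holding back a share of VRAM for overhead."""
    usable = vram_gb * GIB * (1 - reserve_fraction)
    return scene_bytes <= usable


# Hypothetical scene: 500 uncompressed 4K RGBA textures plus 8 GB of geometry.
textures = 500 * texture_bytes(4096, 4096)
geometry = 8 * GIB
scene = textures + geometry

print(f"scene size: {scene / GIB:.2f} GB")
print("fits in 24 GB:", fits_in_vram(scene, 24))
print("fits in 48 GB:", fits_in_vram(scene, 48))
```

With these made-up numbers, the scene comes to about 39 GB, so it would not fit on a 24 GB card but would fit on a 48 GB one.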

Stability

GPUs are notorious for being unstable. If you’re running both your display and your rendering software on a single GPU, chances are that your system might crash or your application might become unresponsive. Driver updates can introduce stability issues of their own. Sometimes, a GPU might simply not be supported by a certain render engine, which leads to additional compatibility issues. Overall, a GPU is dicey in terms of stable system performance.

Rendering Systems

A rendering system comprises two components: rendering engines and rendering hardware. Both of these components should be selected to complement each other.

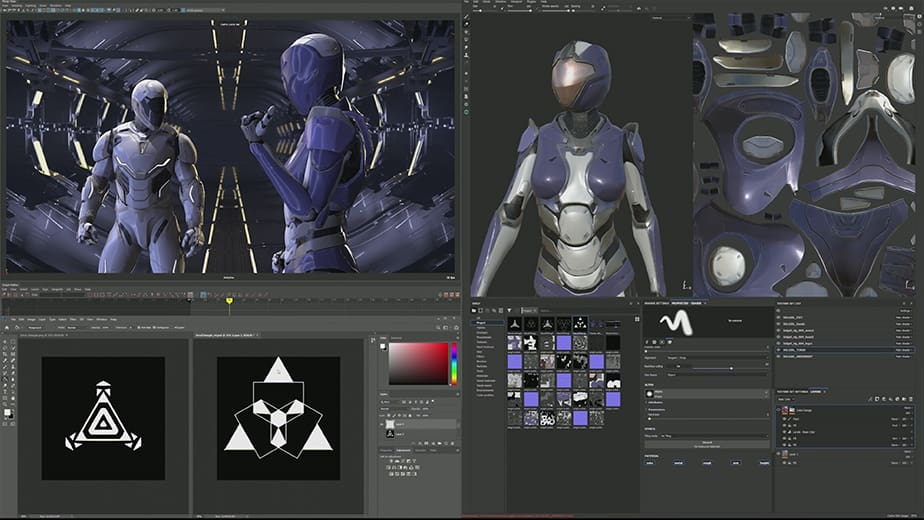

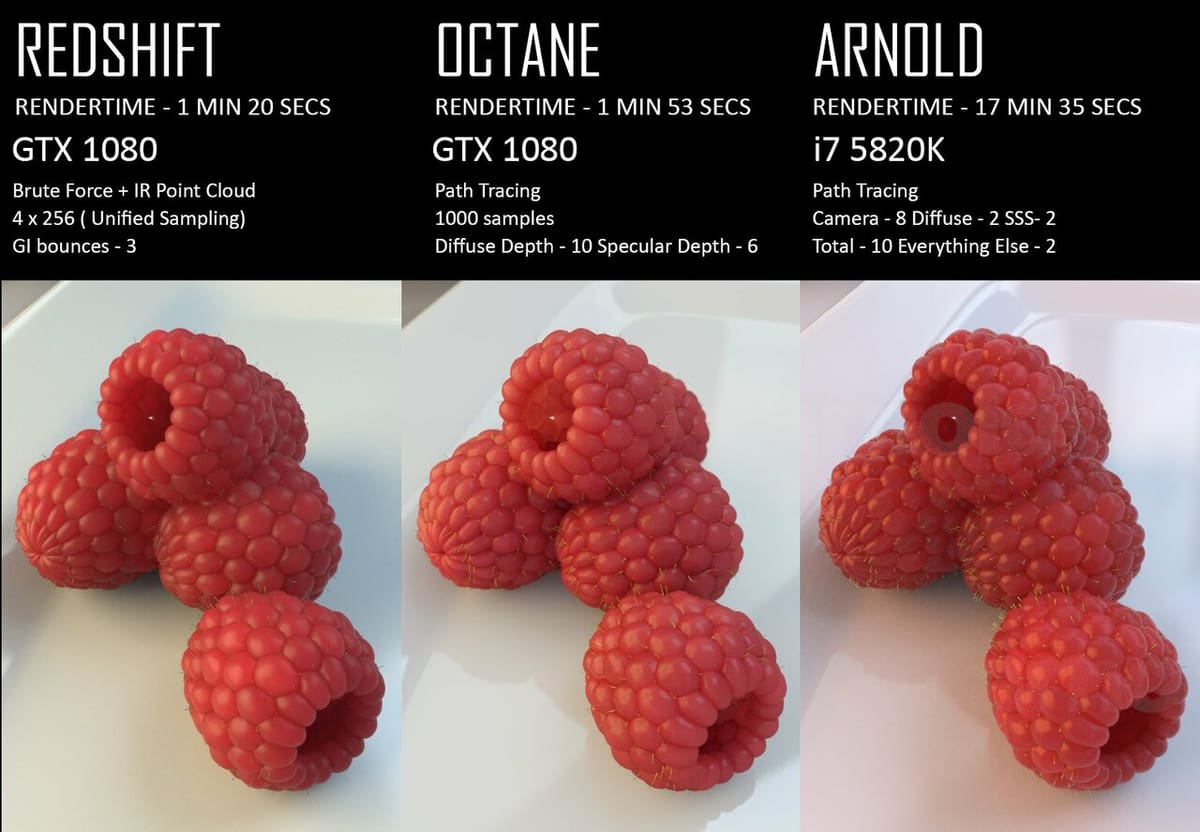

Rendering Engines

A good rendering engine can heavily influence your final render. Render engines turn your raw, unprocessed scene into a final, high-quality rendered image. These engines are essentially plug-ins that work with various 3D software programs, and each is loaded with its own unique features. A few noteworthy ones are Octane, Redshift, V-Ray, and KeyShot.

Rendering Hardware

When we talk about rendering hardware, our focus is on GPUs. As mentioned earlier, GPUs are purpose-built chips. So, even if a GPU has more VRAM, as the Quadro RTX 8000 does, it can still be outperformed by an RTX 3090 when it comes to rendering.

There’s a great guide by CG Director, which compares the performance of various GPUs with Octane and Redshift.

Rendering Framework

CUDA is a proprietary framework developed by Nvidia for its GPUs. Open Computing Language (OpenCL) is an open standard that’s used mainly with AMD GPUs.

Rendering engines such as Octane, Redshift, and even V-Ray support only the CUDA framework, meaning you can only have Nvidia GPUs in your workflow. Blender (Cycles), on the other hand, supports both OpenCL and CUDA, while Cinema 4D (ProRender) relies on OpenCL.

The choice of rendering software may well boil down to CUDA versus OpenCL support. Software such as Final Cut Pro X and Cinema 4D supports only the OpenCL framework, whilst Adobe SpeedGrade supports only the CUDA framework. So, when setting up your GPU rendering workflow, you have to keep in mind not only your choice of GPU, but also the framework needed.
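One way to keep track of these pairings is a simple lookup table. The sketch below uses a few of the software names and frameworks mentioned above. The vendor table is a simplifying assumption that is not stated in this article: Nvidia cards are treated as able to run both CUDA and OpenCL, and AMD cards as OpenCL only. Support also changes between software versions, so always check the current documentation before buying hardware.

```python
# Framework support as described in this article (may differ by version).
SOFTWARE_FRAMEWORKS = {
    "Octane": {"CUDA"},
    "Redshift": {"CUDA"},
    "V-Ray": {"CUDA"},
    "Blender (Cycles)": {"CUDA", "OpenCL"},
    "Final Cut Pro X": {"OpenCL"},
    "Adobe SpeedGrade": {"CUDA"},
}

# Assumption for this sketch: which frameworks each vendor's GPUs can run.
GPU_FRAMEWORKS = {
    "Nvidia": {"CUDA", "OpenCL"},
    "AMD": {"OpenCL"},
}


def compatible_vendors(software):
    """Return GPU vendors sharing at least one framework with the software."""
    needed = SOFTWARE_FRAMEWORKS[software]
    return sorted(
        vendor
        for vendor, supported in GPU_FRAMEWORKS.items()
        if supported & needed  # non-empty intersection means a match
    )


print(compatible_vendors("Octane"))
print(compatible_vendors("Blender (Cycles)"))
```

Under these assumptions, a CUDA-only engine such as Octane matches only Nvidia, while Blender (Cycles) matches both vendors.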

Happy Rendering

In summary, GPU rendering is on par with some of the best-quality CPU rendering out there. The speed, low cost, easy scalability, and optimized performance make it an attractive choice for rendering in many workflow scenarios.

With constant developments and improving technologies, GPUs might even replace CPU rendering systems one day. The choices of hardware and software are also increasing, and as more and more updates take place within the GPU industry, GPU rendering is sure to improve on its shortcomings.

License: The text of "What Is GPU Rendering? – Simply Explained" by All3DP is licensed under a Creative Commons Attribution 4.0 International License.