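Researchers at the MIT Media Lab have found a way to increase the resolution of conventional 3D imaging devices by as much as 1,000 times. The new technique, called Polarized 3D, is more affordable than most professional high-precision industrial 3D scanners and, according to the researchers, can outperform many of them.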

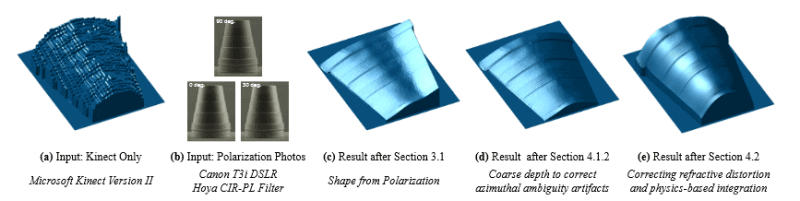

To achieve this, they combined a low-cost 3D scanning technique with a new algorithm. First, they measured the exact orientation of light bouncing off an object, using photos taken through a polarizing filter at three different rotations. This polarization data was then merged with existing coarse depth information.

The researchers’ experimental setup consisted of a Microsoft Kinect — which gauges depth using reflection time — with an ordinary polarizing photographic lens placed in front of its camera. In each experiment, the researchers took three photos of an object, rotating the polarizing filter each time, and their algorithms compared the light intensities of the resulting images.
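To make the "three photos, compare the intensities" step more concrete, here is a minimal Python sketch for a single pixel. It is not the researchers' code. It assumes the filter was set to 0, 45 and 90 degrees (the article does not give the angles) and uses the textbook model in which brightness behind a rotating polarizer follows a cosine curve. The function names `fit_polarization` and `simulate` are made up for this illustration.

```python
import math


def fit_polarization(i0, i45, i90):
    """Recover polarization parameters for one pixel.

    i0, i45, i90 are the pixel intensities with the polarizer rotated to
    0, 45 and 90 degrees (assumed angles, not taken from the article).
    Returns (mean intensity, degree of polarization, angle in radians).
    """
    s0 = i0 + i90          # total intensity
    s1 = i0 - i90          # 0-degree versus 90-degree component
    s2 = 2.0 * i45 - s0    # 45-degree versus 135-degree component
    if s0 <= 0:
        return 0.0, 0.0, 0.0   # dark pixel: nothing to measure
    degree = math.hypot(s1, s2) / s0
    angle = 0.5 * math.atan2(s2, s1)
    return s0 / 2.0, degree, angle


def simulate(mean, degree, angle, filter_angle):
    """Intensity seen through a polarizer at filter_angle (cosine model)."""
    return mean * (1.0 + degree * math.cos(2.0 * (filter_angle - angle)))


# Synthetic pixel with invented values: 30 percent polarized at 30 degrees.
shots = [simulate(100.0, 0.3, math.radians(30), math.radians(a))
         for a in (0, 45, 90)]
mean, degree, angle = fit_polarization(*shots)
print(mean, degree, math.degrees(angle))
```

The recovered angle is only defined up to 180 degrees, because rotating the filter by half a turn gives the same brightness. That ambiguity is one reason polarization alone is not enough and a coarse depth map is a useful partner.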

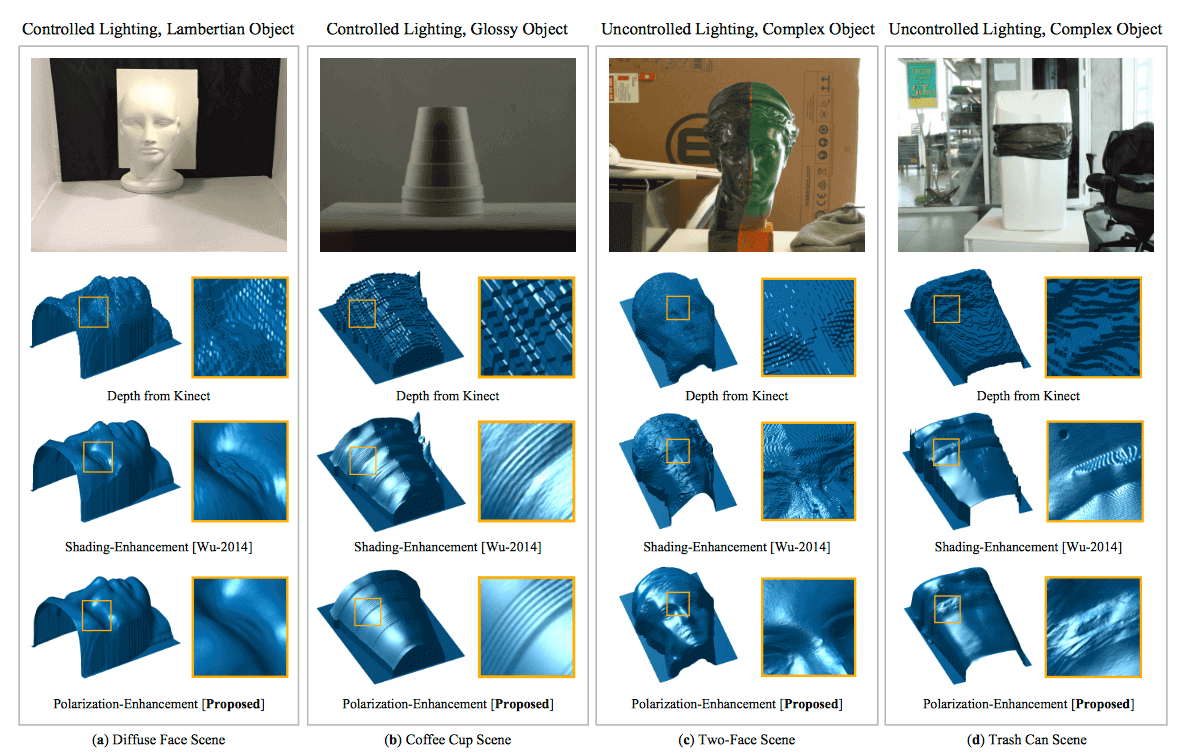

On its own, at a distance of several meters, the Kinect can resolve physical features as small as a centimeter or so across. But with the addition of the polarization information, the researchers’ system could resolve features in the range of tens of micrometers, or one-thousandth the size.

Potentially Disruptive for Professional 3D Scanners

Why is this exciting news? Because the job can be done with a regular camera, a polarizing filter and a low-cost depth sensor like the Microsoft Kinect. Think of a 3D camera built into your smartphone that lets you snap a photo of an object and later 3D print a replica.

“Today, they can miniaturize 3D cameras to fit on cellphones,” says Achuta Kadambi, a PhD student in the MIT Media Lab and one of the system’s developers. “But they make compromises to the 3D sensing, leading to very coarse recovery of geometry. That’s a natural application for polarization, because you can still use a low-quality sensor, and adding a polarizing filter gives you something that’s better than many machine-shop laser scanners.”

Polarization plus depth sensing

So all you need is a polarizing filter for your camera and a total of four captures: one depth image plus three polarization shots taken at different filter rotations. That gives you a healthy amount of data for the number crunching that produces the final shape. The scientists used a standard graphics chip to calculate the 3D image at sub-millimeter resolution – no fancy supercomputer needed.
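What might that number crunching look like? The following toy Python sketch is a one-dimensional illustration, not the algorithm from the paper. It assumes the polarization shots have already been turned into height changes between neighbouring pixels (here idealized as exact), and it blends them with a depth profile quantized to whole centimeters. The function name `fuse_depth_and_slopes`, the `weight` parameter and all sample values are invented for this example.

```python
import math


def fuse_depth_and_slopes(coarse, steps, weight=0.05, sweeps=1000):
    """Refine a 1D depth profile by least squares.

    coarse: coarse depth samples, e.g. from a depth sensor
    steps:  expected height change between neighbours, steps[i] ~ z[i+1] - z[i]
            (assumed here to be derived from polarization data)
    weight: how strongly the result stays tied to the coarse depth

    Minimizes weight * sum((z[i] - coarse[i])**2)
              + sum((z[i+1] - z[i] - steps[i])**2)
    with simple Gauss-Seidel sweeps.
    """
    n = len(coarse)
    z = list(coarse)
    for _ in range(sweeps):
        for i in range(n):
            num = weight * coarse[i]
            den = weight
            if i > 0:                       # left neighbour predicts z[i]
                num += z[i - 1] + steps[i - 1]
                den += 1.0
            if i < n - 1:                   # right neighbour predicts z[i]
                num += z[i + 1] - steps[i]
                den += 1.0
            z[i] = num / den
    return z


def rms_error(a, b):
    """Root-mean-square difference between two equally long profiles."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


# Synthetic surface in centimeters (values invented for illustration).
true_depth = [5.0 + 0.3 * math.sin(0.5 * i) for i in range(40)]
coarse_depth = [float(round(z)) for z in true_depth]   # 1 cm quantization
steps = [true_depth[i + 1] - true_depth[i] for i in range(39)]  # idealized
refined = fuse_depth_and_slopes(coarse_depth, steps)
print(rms_error(coarse_depth, true_depth), rms_error(refined, true_depth))
```

The coarse profile pins down where the surface sits overall, while the slope data fills in the fine ripples the depth sensor cannot see. A smaller `weight` trusts the slopes more, at the cost of more sweeps before the result settles.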

The image quality is astonishing, far surpassing that of existing portable 3D scanning technologies. Just how much the breakthrough builds on existing technology is clear from the fact that the MIT researchers used a Kinect to achieve it.

This doesn’t mean today’s scanning technologies will be obsolete tomorrow. Hand-held 3D scanners like the $10,000 Artec EVA and 3D selfie booths will continue to deliver great results. But in the long run, 3D scanning might get a lot better and become more personal – and that’s good news for everyone.

If you want to dig deeper, MIT Media Lab is offering the full paper for free. There’s also more information on this topic at MIT News.

License: The text of "MIT Just Made Cheap 3D Scanners 1000 Times Better" by All3DP is licensed under a Creative Commons Attribution 4.0 International License.